Open-source tools needed for the future of decentralized moderation

A short list of open-source software that needs to exist to power the future of Trust & Safety for decentralized social networking software.

We're now seeing decentralized social networks reach the mainstream, and as they cross over from fringe usage, we face the need to solve tricky trust and safety issues on these platforms. At the same time, we're seeing new threats to trust and safety tooling that has existed for decades, with NCMEC facing funding cuts and the possibility of tools being misused by authoritarian regimes.

I've worked on trust and safety tooling for Mastodon and related federated and decentralized social networks for a number of years now. This is the tooling I believe will be necessary to handle the current and future moderation and trust and safety issues for these networks and the greater trust and safety ecosystem.

Hash and Match Integrations

Whilst there is currently no legal requirement in the EU to proactively search for illegal or harmful content, it is going to be essential in the future to have systems in place that can hash media with free and open-source perceptual hashing algorithms, and then compare those hashes against datasets of "known harmful content" to find matches. We've already seen attacks against Lemmy where instances were flooded with AI-generated CSAM. Now we're seeing something similar happen against Mastodon, where random people are @-mentioned in posts that contain CSAM, not to mention the whole load of fairly widely defederated instances that traffic in CSAM.

Although some laws refer to “child pornography”, pornography implies consent, which a child can never give.

Early developments in this area include the IFTAS Content Classification Service (IFTAS CCS), which had to be discontinued due to funding issues, and the Fedi-Safety project which focuses on Lemmy and uses Haidra Horde-Safety AI models to detect media that may be CSAM.

There's also the Hasher-Matcher-Actioner (HMA) project from Meta's ThreatExchange repository, where they've open-sourced hash and match as a service, powered by the family of open-source perceptual hashing algorithms developed at Meta.

I've personally done an early integration prototype showing Mastodon hooked up to HMA running as a service on my machine. This prototype was inspired by Juliet Shen's earlier prototype for AT Protocol integration with HMA. This is something that could be run entirely within your own infrastructure. Another approach would be to provide the matching as a service whilst keeping the hashing local, so that only the hashes of media are transferred to a trusted third party.
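To make the matching step a little more concrete, here is a minimal sketch of comparing a locally computed hash against a set of known hashes. It assumes the hashes are equal-length hex strings compared by Hamming distance; the function names, sample hashes, and threshold are all hypothetical, and this is not HMA's actual API.

```python
from __future__ import annotations


def hamming_distance(hex_a: str, hex_b: str) -> int:
    """Count the differing bits between two equal-length hex-encoded hashes."""
    if len(hex_a) != len(hex_b):
        raise ValueError("hashes must have the same length")
    return bin(int(hex_a, 16) ^ int(hex_b, 16)).count("1")


def find_matches(
    candidate: str, known_hashes: dict[str, str], max_distance: int
) -> list[tuple[str, int]]:
    """Return (label, distance) for every known hash within max_distance bits."""
    matches = []
    for label, known in known_hashes.items():
        distance = hamming_distance(candidate, known)
        if distance <= max_distance:
            matches.append((label, distance))
    # Closest matches first.
    return sorted(matches, key=lambda match: match[1])


# Hypothetical 64-bit hashes for illustration only; real perceptual hashes
# would come from a hashing library and a vetted dataset.
KNOWN_HASHES = {
    "entry-1": "ffff0000ffff0000",
    "entry-2": "0123456789abcdef",
}

print(find_matches("ffff0000ffff0001", KNOWN_HASHES, max_distance=4))
```

In the "matching as a service" variant, only the candidate hash string would leave your infrastructure; the media itself never does.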

Content Review & Reporting Tooling

Whilst it's great to be able to detect pertinent matches to known CSAM, Non-Consensual Intimate Imagery (NCII), or Terrorist and Violent Extremist Content (TVEC), this then leads to the issue of safely reviewing and appropriately responding to these matches, and flagging any false positives.

When we designed IFTAS CCS, one of the decisions we consciously made was to not automatically create reports within Fediverse software, since we couldn't guarantee the safety of the moderators receiving these reports. Viewing this material can be deeply traumatizing, and needs to be handled with care. Whilst Mastodon does blur media by default when viewed in the moderation tools, it doesn't greyscale the media, and other Fediverse software doesn't follow best practices of blurring and greyscaling media for moderators.

Rather than reimplementing the content review and reporting tooling in every single project, we instead need common tooling for reviewing pertinent matches for harmful content which supports the right workflows to ensure it is handled as safely as possible.

The reporting side is of note here as well, as the most common question moderators and administrators ask when they face CSAM is: what do I need to do next? The answer to that question varies greatly depending on where your service operates.

If you're a US entity, then you almost certainly need to file a report with NCMEC. If you're a German entity, then the Youth Protection Agency currently suggests immediately deleting the content and acting as if it never existed, because possession of CSAM is strictly illegal in Germany, even for the purpose of reporting it to law enforcement (unless you have a special liaison officer with the federal police).

So we need software that can help us capture the relevant details and file reports with child protection organizations where appropriate.

Reporting Services

As mentioned earlier, there isn't a service through which you can programmatically report CSAM to law enforcement for investigation in Germany. In fact, no such service exists for the entirety of Europe. Sure, you can maybe file a report with Interpol, but the process isn't straightforward.

Given this, I see a need for an open-source operating system for handling incoming reports of illegal and harmful content, allowing for coordination with law enforcement and handling the distribution of verified pertinent hashes back to the community. Think something like modern software for NCMEC, but open-source so any country can run a collection service.

Ideally with the EU potentially getting a clearing house such as NCMEC by legislative mandate, we could see them use open-source tooling to power that service, instead of them having to buy expensive proprietary software.

This service should have a modern API which supports reporting pertinent media and metadata, as well as distributing pertinent media hashes to the community so that everyone can match against these new hashes.

This would likely take someone experienced in the law enforcement workflow at NCMEC to help design and build, but by building it as open source, we'd be resilient to the pressures of any individual government as to what we build and why.

(Also, it'd let us break free from the XML-based API that NCMEC currently uses.)

Sentry Services

When dealing with inauthentic content, such as spam, misinformation, and disinformation, one of the areas that frequently gets attention is how to identify potentially harmful accounts before they start posting.

One technique already employed on the Fediverse is banning sign-ups from well-known temporary inbox services, using the list of services from the Disposable Email Domains project. There is almost certainly more that can be done here, such as combining IP address reputation, common username patterns, etc.

This sort of service would ideally be self-hostable and directly integrate with projects to stop malicious activity in its tracks.
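As a rough illustration of how such signals could be combined at sign-up time, here is a minimal sketch. The domain set, the username pattern, the weights, and the `assess_signup` function are all hypothetical; a real service would load the full Disposable Email Domains list and use its own reputation sources and thresholds.

```python
from __future__ import annotations

import re

# Hypothetical sample data; a real deployment would load the complete list
# published by the Disposable Email Domains project.
DISPOSABLE_DOMAINS = {"tempmail.example", "throwaway.example"}

# Hypothetical pattern: letters followed by a long run of digits,
# e.g. "promo12345678".
SUSPICIOUS_USERNAME = re.compile(r"^[a-z]+\d{6,}$")


def assess_signup(email: str, username: str, ip_flagged: bool) -> tuple[int, list[str]]:
    """Return a risk score and the names of the signals that fired."""
    domain = email.rsplit("@", 1)[-1].lower()
    score = 0
    reasons = []
    if domain in DISPOSABLE_DOMAINS:
        score += 3
        reasons.append("disposable_email")
    if ip_flagged:  # result of an IP reputation lookup done elsewhere
        score += 2
        reasons.append("ip_reputation")
    if SUSPICIOUS_USERNAME.match(username.lower()):
        score += 1
        reasons.append("username_pattern")
    return score, reasons


print(assess_signup("someone@tempmail.example", "promo12345678", ip_flagged=False))
```

Returning the reasons alongside the score means a moderator can see why a sign-up was held for approval, rather than just that it was.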

Whilst my own project, FIRES, can be used to distribute such information, there's still the matter of integrating these.

Community Notes & Annotation Services

Following on from that, to tackle misinformation and disinformation, we will need open-source software that can power community notes and annotations of content from trusted sources. One possible path forwards is to use the Web Annotation Protocol to power such services. There are also things like the Schema.org ClaimReview schema, which underpins Google's Fact Check project.
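To give a feel for what a note might look like, here is a minimal sketch that builds a JSON-LD document following the W3C Web Annotation Data Model. The `build_note` helper and the example URLs are hypothetical, and which fields a real community notes service would require is an assumption on my part.

```python
import json


def build_note(target_url: str, note_text: str, creator_url: str) -> dict:
    """Build a Web Annotation that attaches a plain-text note to a post URL."""
    return {
        "@context": "http://www.w3.org/ns/anno.jsonld",
        "type": "Annotation",
        "motivation": "commenting",
        "creator": creator_url,
        "body": {
            "type": "TextualBody",
            "value": note_text,
            "format": "text/plain",
        },
        "target": target_url,
    }


# Hypothetical URLs for illustration only.
note = build_note(
    target_url="https://social.example/@someone/12345",
    note_text="This image is from 2019, not from this week's event.",
    creator_url="https://factcheck.example/reviewers/1",
)
print(json.dumps(note, indent=2))
```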

Additionally, there's a lot more information compiled under the ticket for this in the ActivityPub Trust & Safety Taskforce's issue tracker. I've also started developing a prototype Web Annotations service to experiment with how this could work for the fediverse.

Spam / Ham Services

Spam currently remains a persistent annoyance on the Fediverse. Whilst Pixelfed has implemented a naive Bayes-based spam/ham classification model, it is deeply integrated into Pixelfed and cannot easily be reused by non-PHP projects.
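For readers unfamiliar with the technique, here is a minimal sketch of a naive Bayes spam/ham classifier with add-one smoothing. It is not Pixelfed's implementation; the class name, the whitespace tokenizer, and the tiny training set are all hypothetical and far too simple for real use.

```python
import math
from collections import Counter


class NaiveBayesSpamFilter:
    """Tiny multinomial naive Bayes classifier over whitespace tokens."""

    LABELS = ("spam", "ham")

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.doc_counts = Counter()

    def train(self, text: str, label: str) -> None:
        self.doc_counts[label] += 1
        self.word_counts[label].update(text.lower().split())

    def classify(self, text: str) -> str:
        vocabulary = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label in self.LABELS:
            counts = self.word_counts[label]
            total_words = sum(counts.values())
            # Smoothed log prior, so an unseen label never produces log(0).
            score = math.log((self.doc_counts[label] + 1) / (total_docs + len(self.LABELS)))
            for word in text.lower().split():
                # Add-one smoothing; the extra +1 reserves room for unknown words.
                score += math.log((counts[word] + 1) / (total_words + len(vocabulary) + 1))
            scores[label] = score
        return max(scores, key=scores.get)


spam_filter = NaiveBayesSpamFilter()
spam_filter.train("buy cheap followers now", "spam")
spam_filter.train("cheap followers click here", "spam")
spam_filter.train("lovely photo of the sunset", "ham")
spam_filter.train("see you at the meetup", "ham")

print(spam_filter.classify("cheap followers"))
print(spam_filter.classify("photo of the meetup"))
```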

There's also work from Marc, a PhD student, on spam classification as a service. It's written to provide a simple API for integrating with other Fediverse software. However, it would still need a user interface, and those integrations would need to be created, before it is usable by moderators.

Another route would be something like tying into Akismet or developing open-source software that is similar. There's also some research on collaborative approaches where models can be exchanged with others without leaking training content (which could include Personally Identifiable Information).

Automod Tooling

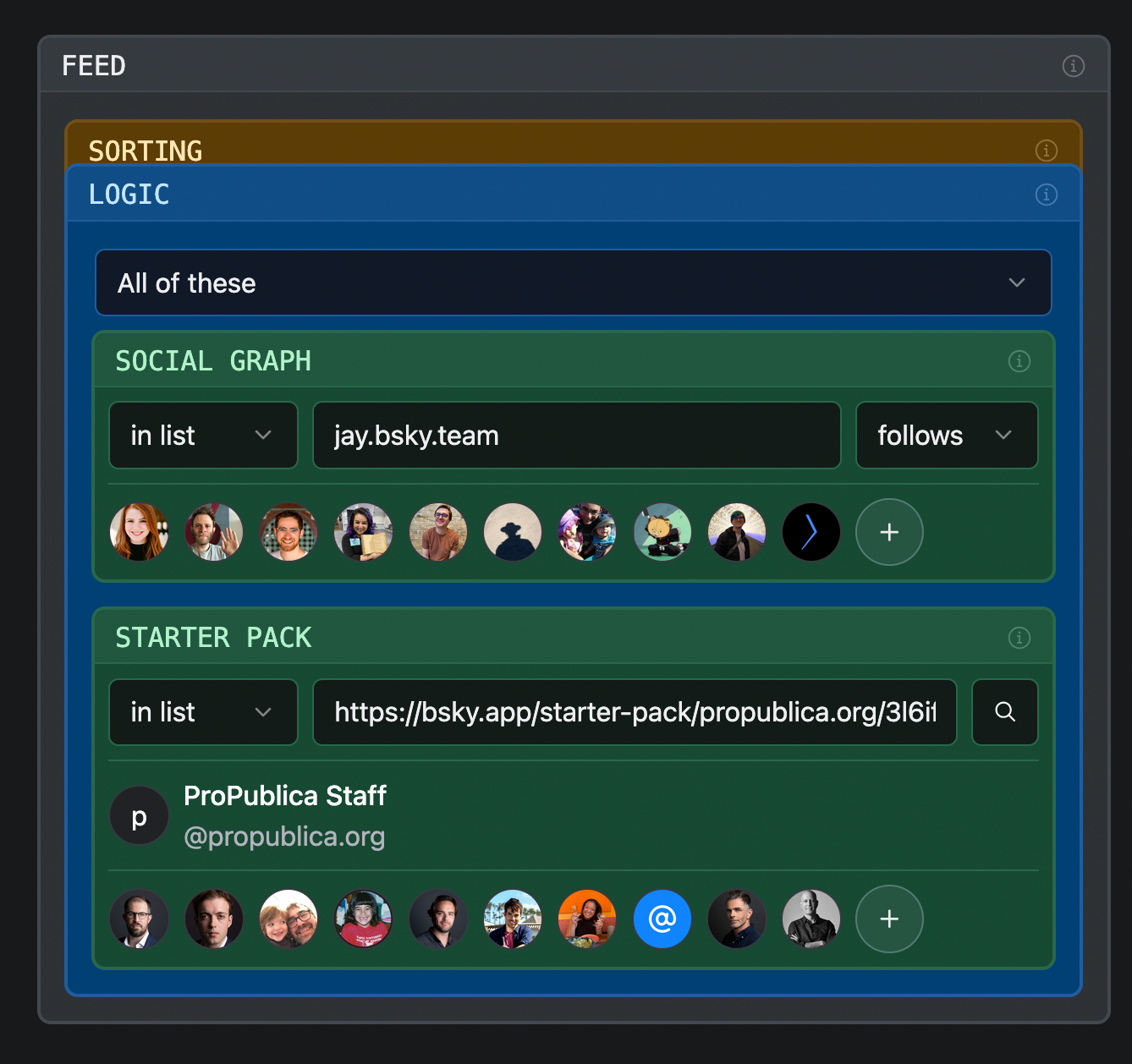

One other area that could be beneficial is the implementation of generic automod, or rule-based automated moderation, tools. Bluesky has open-sourced their Indigo automod tool, and it'd probably be possible to build something similar as a more generic service. Also in this area is the SQRL project; however, it lacks a moderator UI for authoring rules and deploying them.

User-interface wise, we could see UIs similar to that of Graze, but applied to moderating content: effectively "if this, then that". Graze's core technology is also open-source.

Probably a decade ago, I implemented a similar UI for an ad-tech firm that allowed composing rules to match against arbitrary JSON datasets, which under the hood was powered by a Ruby implementation of the MongoDB query language. Whilst today I know I wouldn't use Ruby and a whole bunch of nested procs for it, the concept is still probably a good way to create and execute rules using a simple query builder-style UI.
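To illustrate the concept, here is a minimal sketch of matching JSON-like data against a rule written in a MongoDB-style query syntax. It supports only a small, hand-picked subset of operators and is not a faithful reimplementation of MongoDB's semantics (nor of the original Ruby code); the field names in the example are hypothetical.

```python
from __future__ import annotations

import operator
from typing import Any

COMPARATORS = {
    "$eq": operator.eq,
    "$ne": operator.ne,
    "$gt": operator.gt,
    "$gte": operator.ge,
    "$lt": operator.lt,
    "$lte": operator.le,
    "$in": lambda value, options: value in options,
}


def get_path(doc: Any, path: str) -> Any:
    """Resolve a dotted path like "account.age_days"; None if it is missing."""
    current = doc
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


def compare(op: str, value: Any, operand: Any) -> bool:
    if op not in COMPARATORS:
        raise ValueError(f"unsupported operator: {op}")
    try:
        return bool(COMPARATORS[op](value, operand))
    except TypeError:
        return False  # e.g. ordering a missing (None) value against a number


def matches(doc: dict, query: dict) -> bool:
    """Return True when every condition in the query holds for the document."""
    for key, condition in query.items():
        if key == "$and":
            ok = all(matches(doc, sub) for sub in condition)
        elif key == "$or":
            ok = any(matches(doc, sub) for sub in condition)
        elif isinstance(condition, dict):
            value = get_path(doc, key)
            ok = all(compare(op, value, operand) for op, operand in condition.items())
        else:
            ok = get_path(doc, key) == condition  # shorthand for equality
        if not ok:
            return False
    return True


# Hypothetical rule: new account AND (many links OR a known-bad domain).
RULE = {
    "account.age_days": {"$lt": 7},
    "$or": [
        {"post.link_count": {"$gte": 3}},
        {"account.domain": {"$in": ["spam.example"]}},
    ],
}
ITEM = {"account": {"age_days": 1, "domain": "social.example"}, "post": {"link_count": 5}}

print(matches(ITEM, RULE))
```

Because a rule is plain data, a query-builder UI can produce it, store it, and hand it to the matcher without moderators writing any code.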

These tools also come with the problem of needing to test rule evaluation without actually applying outcomes, so as to give a feedback loop that ensures rules are actually matching what they should.
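One way to get that feedback loop is a dry-run mode, where rules are evaluated and matches are logged but no action is carried out. Here is a minimal sketch under that assumption; the `Rule` class, the action names, and the sample posts are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Rule:
    name: str
    predicate: Callable[[dict], bool]
    action: str  # hypothetical action label, e.g. "flag_for_review"


def evaluate(
    rules: list[Rule],
    items: list[dict],
    apply_action: Optional[Callable[[str, dict], None]] = None,
) -> list[tuple[str, str, str]]:
    """Log (rule name, item id, action) for each match.

    Actions are only carried out when apply_action is given; leaving it as
    None is the dry run.
    """
    log = []
    for item in items:
        for rule in rules:
            if rule.predicate(item):
                log.append((rule.name, item["id"], rule.action))
                if apply_action is not None:
                    apply_action(rule.action, item)
    return log


RULES = [
    Rule("many-links", lambda post: post["link_count"] >= 3, "flag_for_review"),
    Rule(
        "new-account-mention-spam",
        lambda post: post["account_age_days"] < 1 and post["mention_count"] > 5,
        "limit_account",
    ),
]
POSTS = [
    {"id": "a", "link_count": 4, "account_age_days": 10, "mention_count": 0},
    {"id": "b", "link_count": 0, "account_age_days": 0, "mention_count": 9},
    {"id": "c", "link_count": 0, "account_age_days": 400, "mention_count": 1},
]

# Dry run: see what would have matched without touching anything.
for entry in evaluate(RULES, POSTS):
    print(entry)
```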

Standalone Moderation Tools

Currently in the Fediverse, it's very common to see moderation tools for triaging and responding to reports be recreated over and over again. There's room to develop a third-party moderation tool that supports more advanced workflows like queue management & rules to filter reports into given queues. This software should support reports on multiple entity types (Accounts, Posts, Media, Links/Link Previews, Hashtags, etc), and also help collate reports about the same content.
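As a rough sketch of what collating reports and filtering them into queues could look like, consider the following. The report fields, queue names, and routing thresholds are all hypothetical and only meant to show the shape of the idea.

```python
from __future__ import annotations

from collections import defaultdict


def collate(reports: list[dict]) -> dict[tuple[str, str], list[dict]]:
    """Group reports that are about the same entity (type + id)."""
    grouped = defaultdict(list)
    for report in reports:
        grouped[(report["entity_type"], report["entity_id"])].append(report)
    return dict(grouped)


def route(entity_type: str, reports: list[dict]) -> str:
    """Pick a queue for a group of reports; the first matching rule wins."""
    if entity_type == "media":
        return "media-review"  # e.g. a queue with blurring and greyscaling
    if len(reports) >= 3:
        return "high-volume"
    return "default"


REPORTS = [
    {"id": 1, "entity_type": "post", "entity_id": "p1", "reason": "spam"},
    {"id": 2, "entity_type": "post", "entity_id": "p1", "reason": "spam"},
    {"id": 3, "entity_type": "hashtag", "entity_id": "h1", "reason": "offensive"},
    {"id": 4, "entity_type": "media", "entity_id": "m1", "reason": "illegal"},
    {"id": 5, "entity_type": "post", "entity_id": "p1", "reason": "phishing"},
]

queues = defaultdict(list)
for (entity_type, entity_id), grouped in collate(REPORTS).items():
    queues[route(entity_type, grouped)].append((entity_type, entity_id, len(grouped)))

for queue_name, entries in queues.items():
    print(queue_name, entries)
```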

At the moment in Mastodon it's technically only possible to report an account. Whilst moderators can take action on individual posts, the report is about the account, and the posts are just extra information. You cannot report a link preview or a hashtag, and these can be common vectors for abuse (phishing or harmful media in link previews, and harmful or offensive hashtags).

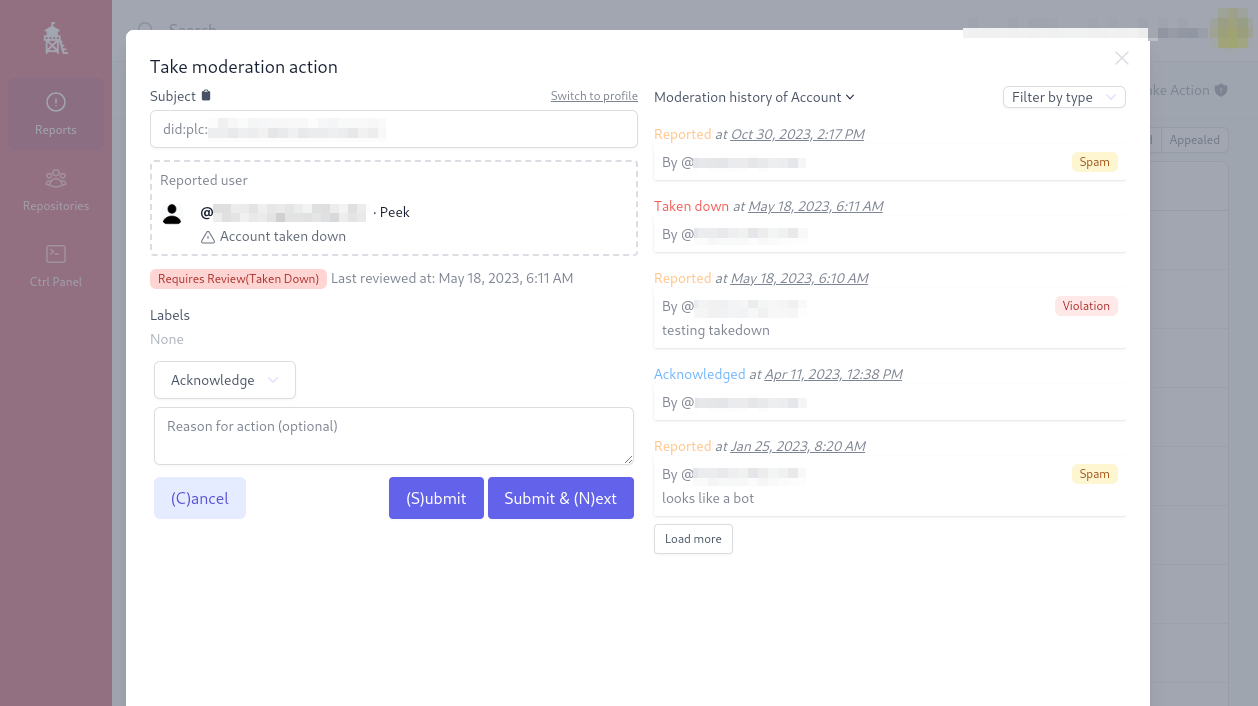

In Bluesky, their Ozone project could be the start of such a tool, though as it stands, it's deeply integrated with AT Protocol specifics and the user interface isn't the best designed for moderator ease of use.

The Letterbook Project is doing a lot of interesting research into moderation tooling, but from the context of building better moderation tools for their own software project.

One could imagine an open-source alternative to proprietary tools like Hive AI, Tremau, or similar moderation platforms, which can adapt to different platforms like Mastodon and Bluesky and provide a unified experience for moderators. Perhaps it could also support multi-tenancy, so you can moderate across multiple different services from the same comfortable user interface.

This sort of tool was the original idea behind me acquiring the FediMod name, because I thought we were wasting significant resources having to all build the same UIs and tools over again.

An additional idea here is for these tools to allow capturing a report in a safe way to support training future moderators, such that in training you could replay the report without any action actually taking effect.

This list isn't exhaustive

There's certainly a whole lot more tooling than is on this list, and I've probably forgotten some notable ones. However, that doesn't mean that tooling shouldn't be built; it just means I didn't think of it whilst writing this post.

If you’d like to support the work I’m doing, you can at https://support.thisismissem.social!